Salvador DaBot, the portraitist robot

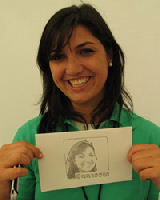

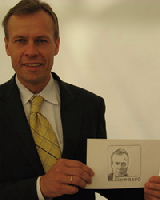

The Portraitist Robot recognizes human faces in its surroundings, takes a snapshot, and extracts the relevant features from the recorded image. It then converts the captured image into vector art and draws the participant's portrait, using inverse kinematics to control its arm.

You can find previous videos of the Portraitist Robot v1.0 here and here. The Portraitist Robot v2.0 is drastically more advanced than v1.0: it now has a moustache and a beret!

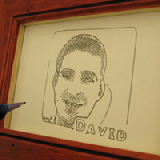

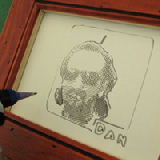

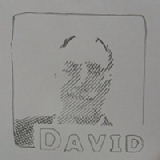

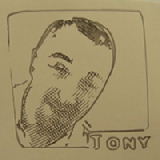

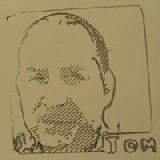

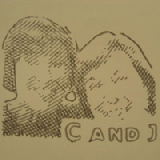

Contours of the faces are first extracted from the images captured by the robot's internal cameras. The contours are then converted to paths, which are organized according to their lengths.
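As a rough illustration of organizing contours by length, the sketch below treats each contour as a list of `(x, y)` points, measures its polyline length, and sorts the paths. The function names, the `min_length` filter, and the longest-first order are assumptions made for this example; the original system may organize its paths differently.

```python
import math

def path_length(contour):
    """Total polyline length of a contour given as a list of (x, y) points."""
    return sum(math.dist(p, q) for p, q in zip(contour, contour[1:]))

def order_contours(contours, min_length=0.0):
    """Drop contours shorter than min_length and sort the rest, longest first.

    Both the filter and the sort direction are assumptions for illustration.
    """
    kept = [c for c in contours if path_length(c) >= min_length]
    return sorted(kept, key=path_length, reverse=True)

# Three toy contours with lengths 1, 5 and 4.
contours = [
    [(0, 0), (1, 0)],
    [(0, 0), (3, 4)],
    [(0, 0), (0, 2), (2, 2)],
]
paths = order_contours(contours, min_length=2.0)
```

Drawing the longest paths first means the main outline of the face appears early, and very short contours, which are often noise, can be skipped altogether.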

Then, the image is segmented into several shades of gray. The contours and extracted areas are simplified to keep only the important features of the face, which gives the result a cartoon-like look. The robot reproduces the different shades of gray by painting several layers of drawing patterns, designed to make the painting look more artistic. The areas are filled much as a human would fill them: starting from one point, recursively filling the current area, and jumping to another area once it is finished.
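The segmentation and area-by-area filling can be sketched as follows. This is a minimal example, assuming the image is a list of rows of gray values in the range 0 to 255; `quantize` and `label_regions` are hypothetical names, and the fill uses an explicit stack instead of true recursion to avoid Python's recursion limit.

```python
def quantize(image, levels=4):
    """Map each gray value (0-255) to one of `levels` shade indices."""
    return [[min(v * levels // 256, levels - 1) for v in row] for row in image]

def label_regions(shades):
    """Group 4-connected pixels of equal shade into regions, in scan order.

    Returns a list of (shade, pixels) tuples, where pixels is a list of (x, y).
    """
    height, width = len(shades), len(shades[0])
    labels = [[-1] * width for _ in range(height)]
    regions = []
    for y in range(height):
        for x in range(width):
            if labels[y][x] != -1:
                continue  # pixel already belongs to a filled area
            shade, index = shades[y][x], len(regions)
            labels[y][x] = index
            stack, pixels = [(x, y)], []
            while stack:  # fill the current area completely before moving on
                cx, cy = stack.pop()
                pixels.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and labels[ny][nx] == -1 and shades[ny][nx] == shade):
                        labels[ny][nx] = index
                        stack.append((nx, ny))
            regions.append((shade, pixels))
    return regions

image = [
    [0, 0, 255],
    [0, 200, 255],
    [100, 100, 100],
]
shades = quantize(image, levels=4)
regions = label_regions(shades)
```

Each region could then be covered with as many pattern layers as its shade index calls for, so that darker areas receive more strokes.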

Finally, a robust inverse kinematics controller is used to convert the 2D drawing into a set of joint angles that are run on the robot.
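To give an idea of what such a controller does, here is a minimal sketch of damped least-squares inverse kinematics for a planar three-joint arm. It is a simplified stand-in, not the controller running on the robot: the planar arm model, the link lengths, the damping value, and all function names are assumptions chosen for the example.

```python
import math

def forward(angles, lengths):
    """End-effector position (x, y) of a planar serial arm."""
    x = y = total = 0.0
    for angle, length in zip(angles, lengths):
        total += angle
        x += length * math.cos(total)
        y += length * math.sin(total)
    return x, y

def jacobian(angles, lengths):
    """Rows of the 2 x n Jacobian: d(x)/d(q_j) and d(y)/d(q_j)."""
    n, total, cum = len(angles), 0.0, []
    for angle in angles:
        total += angle
        cum.append(total)
    jx = [-sum(lengths[k] * math.sin(cum[k]) for k in range(j, n)) for j in range(n)]
    jy = [sum(lengths[k] * math.cos(cum[k]) for k in range(j, n)) for j in range(n)]
    return jx, jy

def solve_ik(target, angles, lengths, damping=0.1, max_step=0.25,
             iterations=200, tol=1e-4):
    """Iterate dq = J^T (J J^T + damping^2 I)^-1 e until the error is below tol."""
    angles = list(angles)
    for _ in range(iterations):
        x, y = forward(angles, lengths)
        ex, ey = target[0] - x, target[1] - y
        err = math.hypot(ex, ey)
        if err < tol:
            break
        if err > max_step:  # limit the Cartesian step to stay near the linear regime
            ex, ey = ex * max_step / err, ey * max_step / err
        jx, jy = jacobian(angles, lengths)
        # A = J J^T + damping^2 I is a symmetric 2x2 matrix [[a, b], [b, d]].
        a = sum(v * v for v in jx) + damping ** 2
        b = sum(u * v for u, v in zip(jx, jy))
        d = sum(v * v for v in jy) + damping ** 2
        det = a * d - b * b  # positive whenever damping > 0
        wx = (d * ex - b * ey) / det
        wy = (a * ey - b * ex) / det
        angles = [q + u * wx + v * wy for q, u, v in zip(angles, jx, jy)]
    return angles

lengths = [1.0, 1.0, 0.5]   # hypothetical link lengths
target = (1.2, 1.2)         # one point of the 2D drawing
solution = solve_ik(target, [0.5, 1.0, 0.5], lengths)
reached = forward(solution, lengths)
```

The damping term keeps the joint updates bounded near singular configurations, such as a fully stretched arm, which is one common way of making an inverse kinematics solver robust. With three joints and a two-dimensional target the arm is redundant, and this update picks a small joint motion among the many that would reach the point.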

To make the robot look more human-like, motions of the left arm and head were recorded with motion sensors attached to the body of a person demonstrating the gestures. This step matters because humans produce small oscillations even when standing in a fixed posture. Reproducing these oscillations on the robot makes its gestures look natural and the robot itself more lively.

Apart from promoting robotics as a fun and interesting research area, this work also aims to showcase the capabilities of the HOAP-3 robot, as well as the integration of different motor and sensory components such as vision processing, clustering, human-robot interaction, speech synthesis, inverse kinematics, and redundant control of humanoid robots.

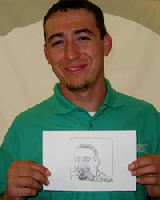

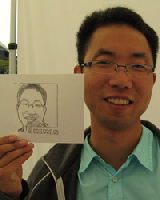

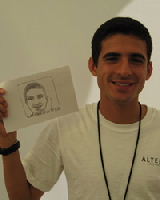

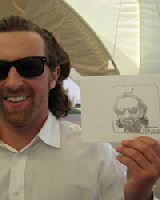

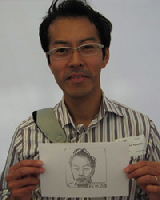

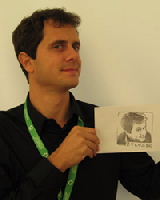

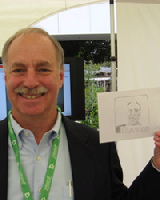

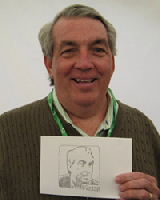

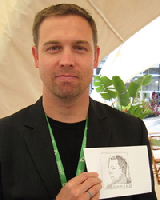

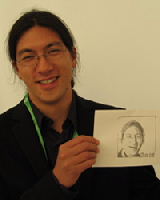

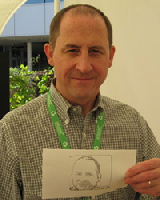

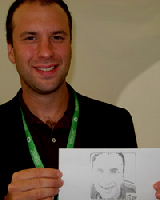

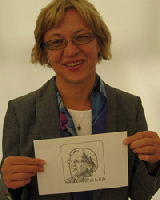

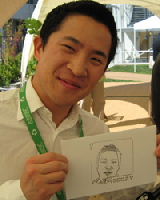

Here are the first masterpieces of our mustachioed artist, created during the Google Zeitgeist 2008 event.